The other day, someone complained that ChatGPT did not follow his instructions.

Ansh Agrawal: Is it just me, or has ChatGPT become worse at following instructions? I told it to do X, and it did Y. I cleared out the instructions and asked if it understood them. It said yes. But, it did the same thing again, doing Y instead of X.

I replied, tongue in cheek, as follows:

AI is supposed to mimic humans. How many humans follow your instructions at the first attempt?

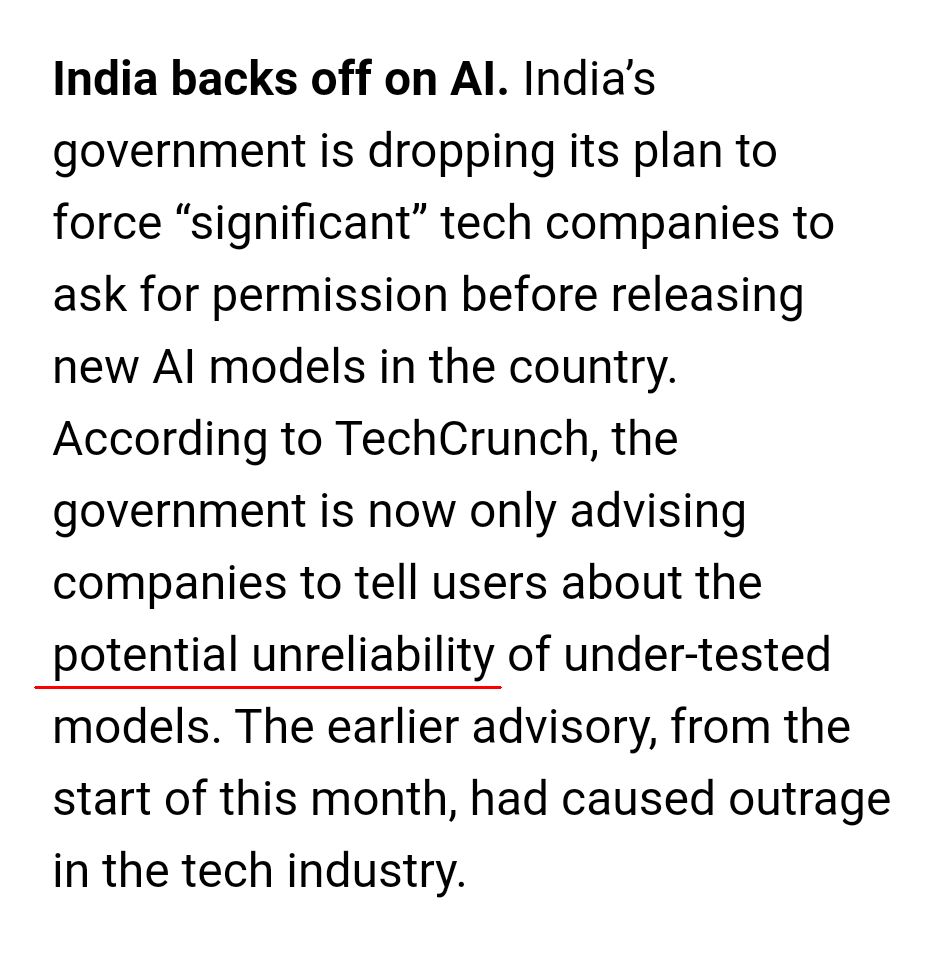

Unreliability is an inherent trait of AI. Since it has not yet sunk in, it’s causing all kinds of angst around hallucination etc. In this post, I’ll try to minimize the culture shock that it’s likely to cause.

Common man has always viewed technology as a tool that reduces human arbitrariness and improve discipline. His perspective was more-or-less right during the past tech waves. Perceiving AI as sophisticated and cutting-edge technology, J6P (short for Joe Six Pack or common man) now believes that it will do an even better job of reducing human arbitrariness and improving discipline than the previous technologies did.

His expectation will be belied because the fundamental thing about AI is that it’s an artificial way to behave like humans. Therefore, it’s only natural that AI might go in the opposite direction and behave unreliably.

Anybody who has used ChatGPT would know that it gives different answers to the same question at different times. As @jeremyakahn notes in his FORTUNE magazine Eye for AI newsletter:

(GenAI) technology is bewildering, producing results that can seem magical and brilliant one moment and inept and dangerously wrong the next.

Since it’s a probabilistic system, lack of reliability is a feature, not a bug, of AI. This is particularly true for GenAI (short for Generative Artificial Intelligence), the sub-category of AI that GPT and ChatGPT belong to.

My B.Tech Project at IIT Bombay was on Stochastic Modeling and Simulation of Fluidized Bed Reactor Regenerator System. A fluidized bed reactor-regenerator is a canonical example of a stochastic system in chemical engineering. It did not behave reliably at the time (mid-80s). It does not behave reliably today.

A stochastic aka probabilistic system is non-deterministic. This is in contrast to a deterministic system.

An everyday example of a determistic vs. non-deterministic system is password vs. biometrics for accessing laptop, phone, and other devices.

A password is either right or wrong. However, biometrics operates in gray area.

As I highlighted in Hardware Matters,

Biometric authentication is a probabilistic process that runs at a predefined confidence level. While fingerprint, iris, voice, and other biometric attributes are unique to an individual, biometric authentication is not as foolproof as commonly believed. A fingerprint scanner captures the user’s fingerprint and transfers it to the authentication software. The software extracts 8 to 16 features from the raw print and compares them with the corresponding 8-16 features of the master. In some jurisdications (e.g. USA), even if just eight features match (“8 point match”), the two prints are judged the same; whereas in other jurisdictions (e.g. UK), the matching threshold is “16 point match” i.e. all 16 features need to match (Source: Biometric fingerprint scanners). More the number of matching points, greater is the reliability of authentication. However, more also is the amount of raw data required from the fingerprint scanner.

Ergo reliability of a biometrics system depends on the specs and quality of hardware one uses to capture the biometrics such as fingerprint.

I’ve noticed that my fingerprint works first time on my tablet, only after 3-4 attempts on my laptop, and never on my smartphone. The wide variance in performance is probably due to the difference in quality of fingerprint scanners used in those devices.

As an aside, I’ve observed a big difference in the reliability of biometrics based on who supplies the readers and scanners.

CENTRALIZED VERSUS DECENTRALIZED BIOMETRICS

Where biometric readers are provided by a central party, they’re of reasonably high quality. While they might need to be cleaned and tried 2-3 times before they work, most users tolerate that much friction, and “centralized biometrics”, as I call this form factor, has gone mainstream e.g. account opening at a bank branch. However, when biometric readers are owned by end consumers, they fall in a wide spectrum of price, quality, and reliability, and “decentralized biometrics” has not gone mainstream e.g. NetBanking. Interestingly, India’s Aadhaar identification program, which is based on biometrics, has extremely wide adoption. Banks use their own fingerprint reader and use biometric identification to open accounts at branch. However, during digital account opening and ongoing login to NetBanking by end customers, they eschew biometrics and resort to password and / or OTP, which are deterministic and reliable. If decentralized biometrics maxis advocate that end users should pay top $ to buy devices with high quality fingerprint reader just so that they can use fingerprint instead of password / OTP, that’s not how consumers behave – instead they simply reject the flaky biometrics they have and move on.

Other examples of stochastic systems include credit card fraud detection, COVID-19 testing, etc.

A tell tale sign of a probabilistic system is it will be accompanied with a certain False Positive rate. Such a system is useful when it exhibits a True Positive and True Negative and useless when it exhibits a False Positive and False Negative. See False Positive Primer for more details.

Unreliable behavior is a feature, not bug, of stochastic systems. In the specific case of a Large Language Model that undergirds Gen AI / ChatGPT, LLMs generate responses by randomly sampling words based in part on probabilities. Their ‘language’ consists of numbers that map to tokens.

That’s why they give different answers to the same question at different times.

As Sam Altman, founder and CEO of OpenAI, told FORTUNE magazine recently,

Training chat models might be more similar to training puppies – they don’t always respond the same.

Should we reject AI because it’s inconsistent?

Should we reject AI because it’s inconsistent?

Unfortunately we can’t. Because, according to Gödel Incompleteness Theorems of mathematical logic, any system can be either consistent or complete, but not both. If you go about rejecting a system because it’s inconsistent, you’d have to reject virtually every system on the planet. While J6P has a fair expectation of consistency, fact is, if he wants completeness, has has to accept inconsistency, and vice versa.

That said, we can’t accept every unreliable system, either.

Where do we draw the line? How should we evolve heuristics for accepting or rejecting AI systems?

Unlike a deterministic system that is right 100% of the time, a probabilistic system is right less than 100% of the time (let’s say X% of the time, where X < 100%).

Is there a way to estimate X before running an AI model?

Unfortunately, there isn’t. According to AI experts, Church’s Theorem and other standard methods to judge the reliability of probabilistic systems don’t work for AI / ML.

The real dilemma of so-called “AI” – more accurately known as neural net – computation is two fold. First it is not algorithmic – it does not compute by discrete symbols corresponding to step-by-step assertions / axioms. Entscheidungsproblem does not apply. Formal decidabilty and Church’s theorem do not apply. We really don’t have a branch of mathematics that deals with neural net computation – we are flying blind without a clue where the mountains are. Secondly, neural nets inductively self-adjust to a programmatic space (weights and bias and any other parameters relevant to the specific architecture) whose meaning is basically unfathomable at this time. So there is no algorithmic or behavioral definition against which to test. The host of testing heuristics which do a much improved job of testing (code coverage, boundary conditions, model-based testing) do not apply. Even where practical, proof of program correctness does not apply (generally cannot be verified but specific ones can be). So we accept these “AI” thingees sort of work, mainly when the self-interested take very small samples of infinite behavior to sell everyone from the FDA to grandma that they are ready to go. – Robert DuWors.

In the absence of an ex-ante approach to approve or reject AI, I propose an ex-post approach. Called the portfolio approach, it’s used in marketing, investment banking, and venture capital. In this approach, we judge AI by its outputs / outcomes rather than inputs. (Inputs are like individual campaigns, trades and investments that are not right 100% of the time.)

Here, we run an AI model, and enumerate the gains when it’s right (P) and losses when it’s wrong (Q).

A first cut success metric would be P > Q. In other words,

Accept the system if P > Q. Reject it otherwise.

Put in plain English, we accept an AI model as long as long as its gains when it’s right exceed its losses when it’s wrong.

LLM is not supposed to interpret reality and summarize it accurately; it's in the business of completing texts in plausible ways. That's a risky approach to financial modeling but an incredibly efficient way to summarize meetings etc. @matt_levine. #AI #ChatGPT

— Ketharaman Swaminathan (@s_ketharaman) March 6, 2024

To understand the notion of gains and losses when right and wrong respectively, let’s take the example of Credit Card Fraud Detection. Used by many credit card issuer banks, this probabilistic system sniffs every credit card transaction and judges whether it’s genine or fraudulent. As you can see at False Positive Primer, this system is not right all the time, ergo we have false declines.

Algorithmic trading firms mint billionaires by being right 51% of the time – @matt_levine.

- When the algorithm is right, it detects fraud correctly, helps the issuer to stop the payment and ensuing fraud, and thereby enhances customer experience and save chargeback costs – which are gains.

- When the algorithm is wrong, it detects fraud wrongly, makes the issuer decline the payment, and thereby lose revenue and annoy the customer – which are losses.

If we use the term Break Even Point to denote the point at which gains exceed losses, it would appear that X is quite high for the above system – maybe as high as 99%. BEP could occur at other values of X for other systems e.g. 51% for algorithmic trading, 70% for AI and 90% for venture capital investments.

On a lighter note, ERP customers apparently define success as whatever they get when they go live!

%age of Cos that rated their ERP project a success:

* 2015: 58%

* 2019: 88%.Key Reason for Sharp Increase: "To avoid reputational damage coming from failure, cos redefine success as whatever they get." Now you know why aspirational selling works. https://t.co/fKX1hjD4t1

— GTM360 (@GTM360) April 16, 2020

I can vouch for this from personal experience. Before starting the implementation, customers expect to know high falutin’ things like production cost of each item in each production facility. When they go live, they rejoice that they can print an invoice.

I hope that never happens in the case of AI! But I fear it will: AI may not be all that immune to the Third Law of Software Marketing:

Make the customer want “best” before the purchase and settle for “good enough” after the purchase!!!

UPDATE DATED 20 JUNE 2024:

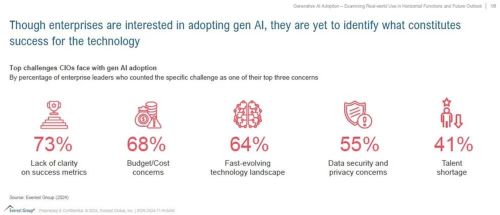

According to FORTUNE magazine’s CIO INTELLIGENCE newsletter dated 19 June 2024, the biggest hurdle in the adoption of Generative AI in enterprises is “Lack of clarity on success metrics”.

Well, if they read this post, they’d be sorted!