Artificial intelligence is promising to disrupt a wide array of legacy practices. – Dan Primack, Axios PRO Rata.

Based on my firsthand experience with the AI tsunami over the past 9-10 months, I can wholeheartedly get behind the above prediction.

In fact, I’ve already noticed 10 revolutionary things, aka game changers, that set ChatGPT and other GenAI platforms apart from ERP, the Internet, Search, Low Code / No Code, Mobility, Cloud, Blockchain, and other disruptive technologies of the past 35+ years.

With 10X the market share of the second most popular product, ChatGPT is a fair proxy for the entire Generative Artificial Intelligence sub-industry. Therefore, for the sake of convenience, I’ll use the term ChatGPT as a catch-all for all LLM products including Google Gemini, Anthropic Claude, etc. (Where ChatGPT / OpenAI materially differs from the other LLMs and their owners, I’ll highlight that separately.)

Monthly active users on top AI sites:

ChatGPT — 200M

Gemini — 20M

CharacterAI — 13M

Poe — 11M

Perplexity — 10M

HuggingFace — 6.4M

ElevenLabs — 6.3M

Leonardo — 4.7M

CivitAI — 4.1M

Plenty of surprises here (Poe!) but its also so clear how far AI tools are from mainstream!

— Deedy (@deedydas) March 18, 2024

I’ll cover five game changers in the first part of this two-part blog post.

Here goes.

1. Zero Time-to-Value

ChatGPT is the only software I know of that generates output without any implementation. No master data entry, no data migration, no training, no nothing. Just sign up, type your prompt, hit ENTER, and get results instantly. That’s because the “P” in GPT stands for “pretrained”, i.e. GPT has already been trained on tons of data.

This is in sharp contrast with ERP, CRM, Marketing Automation, Core Banking System, Customer Engagement Management and any other OLTP system of record, which needs extensive implementation before it begins to deliver value. (Ditto OLAP, just that the implementation efforts there are focused on data cleansing, ETL, data model building, and other activities.)

If an LLM is trained on your website, its output will be customized for you. If not, the LLM platform will need to be paired with RAG (Retrieval Augmented Generation) in order to generate customized answers. According to Tirthankar Lahiri, SVP Data & In Memory Technologies of Oracle (Disclosure: Oracle is my ex-employer), even RAG might not require data scientists, data migration, or any other form of implementation.
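To make the RAG idea concrete, here is a minimal sketch of the retrieval step, assuming a toy word-overlap ranking in place of a real vector search. The names `COMPANY_DOCS`, `retrieve`, and `build_prompt` are hypothetical and the sample documents are made up for illustration. No LLM is called; the sketch only shows how your own content might be attached to a prompt.

```python
import re

# Hypothetical snippets standing in for content from your own website.
COMPANY_DOCS = [
    "Our support desk is open Monday to Friday, 9am to 6pm.",
    "Refunds are processed within 14 days of a return request.",
    "We ship to the UK, the EU, and India.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents that share the most words with the query.

    Assumption: word overlap is a stand-in for the embedding similarity
    that a production RAG setup would typically use.
    """
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend the retrieved snippets to the question as context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", COMPANY_DOCS, k=1))
```

The resulting prompt would then be sent to whichever LLM you use, which is why this route needs no retraining of the model itself.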

As a result, ChatGPT has the highest Speed-to-Value of any technology I know.

GPT beats not just ERP, CRM, etc. on speed to value but also other sub-categories of AI / probabilistic systems. That’s because the latter need to collect adequate data about your digital properties before their results are statistically significant. Depending on your website / app traffic, that could take 2-4 weeks, as we learned firsthand with a B2B Lookalike Audience Campaign software and a Website A/B Testing platform.
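A rough back-of-the-envelope calculation shows why the wait depends on traffic. The sketch below uses the standard sample-size approximation for comparing two conversion rates. The traffic and conversion figures are assumptions chosen purely for illustration, not numbers from our campaigns, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variant(
    baseline_rate: float,
    target_rate: float,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Approximate visitors needed in EACH variant of an A/B test.

    Uses the usual two-proportion formula:
    n = (z_alpha/2 + z_beta)^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = baseline_rate * (1 - baseline_rate) + target_rate * (1 - target_rate)
    effect = target_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_to_significance(
    daily_visitors: int, baseline_rate: float, target_rate: float
) -> int:
    """Days of traffic needed to fill both variants of the test."""
    total_needed = 2 * visitors_per_variant(baseline_rate, target_rate)
    return math.ceil(total_needed / daily_visitors)


if __name__ == "__main__":
    # Assumed scenario: 1,000 visitors a day, hoping to lift conversion from 5% to 6%.
    print(visitors_per_variant(0.05, 0.06))
    print(days_to_significance(1000, 0.05, 0.06))
```

Under these assumed numbers the wait falls inside the 2-4 week window mentioned above, and halving the daily traffic would roughly double it.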

2. Zany Acceptance Heuristics

In the past, software acceptance followed the standard playbook: Run test cases, test output against expected results, PASS the software if its output matches expected results, FAIL the software otherwise.

This will not work on AI. By their very nature, ChatGPT and other LLMs are probabilistic systems and will give different answers to the same question at different times. So GenAI cannot be tested using the conventional playbook. In Evolving Heuristics For Acceptance Of AI Systems, we provide an alternative approach based on portfolio management theory for accepting / rejecting LLMs.
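To illustrate why the pass / fail playbook breaks down, here is a minimal sketch that contrasts an exact-match test with a simple pass-rate check over repeated runs. This is a generic statistical stand-in, not the portfolio-based approach from the linked post. `make_fake_llm` is a hypothetical, seeded imitation of a model, and the 90% threshold is an arbitrary assumption.

```python
import random
from typing import Callable

Model = Callable[[str], str]


def make_fake_llm(seed: int) -> Model:
    """Return a stand-in 'LLM' that rephrases the same answer at random."""
    rng = random.Random(seed)
    phrasings = ["Paris", "The capital of France is Paris.", "It is Paris."]

    def fake_llm(prompt: str) -> str:
        return rng.choice(phrasings)

    return fake_llm


def exact_match_test(model: Model, prompt: str, expected: str) -> bool:
    """Conventional playbook: one run, output must equal the expected string."""
    return model(prompt) == expected


def pass_rate_test(
    model: Model,
    prompt: str,
    is_acceptable: Callable[[str], bool],
    runs: int = 50,
    threshold: float = 0.9,
) -> bool:
    """Accept the model if enough of its sampled answers satisfy a rubric."""
    passes = sum(is_acceptable(model(prompt)) for _ in range(runs))
    return passes / runs >= threshold


if __name__ == "__main__":
    question = "What is the capital of France?"
    # The exact-match verdict depends on which phrasing happens to come back.
    print(exact_match_test(make_fake_llm(0), question, "Paris"))
    # The pass-rate verdict only asks whether the answers are good enough overall.
    print(pass_rate_test(make_fake_llm(0), question, lambda a: "Paris" in a))
```

The point of the sketch is the shift in the unit of acceptance: from a single output matching a single expected value to a distribution of outputs clearing a bar.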

3. Data Is Finally The New Oil For Data Owners

The meme “Data is the new oil” has been doing the rounds for at least 10 years.

Five years ago, Shopin and a few other startups offered cash for consumer shopping data. When WIRED reporter Gregory J. Barber tried to cash in his personal shopping history, he discovered that all he got was peanuts.

Based on this sample of one, I jumped to the conclusion that data is the new oil only for Google, Facebook / Meta and other ad techs that run targeted ads on top of it (but not for the person to whom the data belongs). Since we haven’t heard any stories of individuals becoming generationally rich by selling their personal data, my knee-jerk conclusion has aged well.

GenAI might change that. In the last three months or so, Reddit, Axel Springer, and a few other publishers have reported data deals with OpenAI, Google and a string of other GenAI companies. For example, Reddit has disclosed data licensing contracts worth roughly $200M in total, spread over two to three years, for letting LLMs train on its data.

Going by these deals, I’m tempted to declare that data is finally the new oil, at least for data owners. The one dampener is the lawsuit filed by The New York Times against OpenAI, alleging that the AI major is trying to “get away with offering NYT – ahem – peanuts” for its data!

On a side note, sovereign funds from Saudi Arabia and the United Arab Emirates are reported to be in talks to invest hundreds of billions of dollars in Sam Altman’s AI chip venture, so the Middle East, which is already the Big Daddy of traditional oil, may also become the Big Daddy of modern oil!

If data is the new oil, expect the Middle East to become an undeniable player in the AI boom. https://t.co/Trpx5OEZa0 #axiosprorata.

Middle East is the undeniable Big Daddy in both old oil and new oil.

— Ketharaman Swaminathan (@s_ketharaman) March 17, 2024

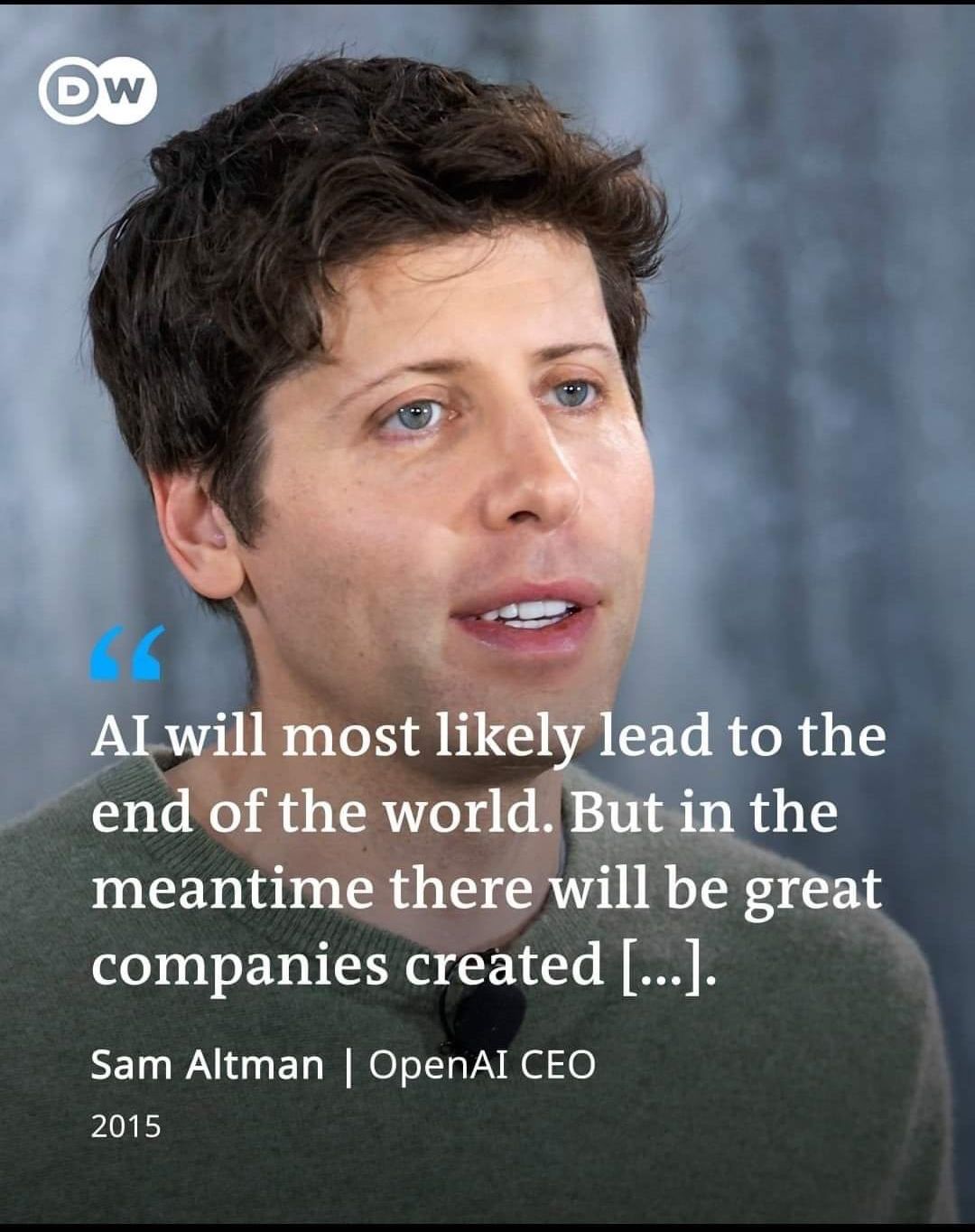

4. Talk Book & Spread FUD – At The Same Time

I have not come across any other technology whose de facto chief spokesperson talks it up so much on the one hand and spreads massive FUD (Fear, Uncertainty, Doubt) about it on the other, both within the span of a few hours.

Sam Altman’s see-sawing about GenAI / LLM is unparalleled.

5. Makes GIGO Obsolete

OpenAI / GPT is trained on data scraped from billions of websites. Owners of those websites were not given advance notice that their data was going to be slurped by OpenAI or otherwise told to prep their data in any form, so it’s safe to assume that there was no assurance of the quality of data ingested by GPT.

In other words, the data could have been garbage for all we know. Still, ChatGPT largely delivers non-garbage output.

Therefore the old wisdom of Garbage In Garbage Out, which applied to all software in the past, does not apply to GPT.

I speculate that LLMs have managed to circumvent GIGO by using one or more of the following techniques:

- Bundling a data-cleanser with their other modules

- Using third party data sourced from, e.g., data brokers to enrich the data supplied by their customers (first party data). Accordingly, even if the first party data has an error or omission, LLMs can fix it. For example, if first party data contains an address like “75 Meridian Place, Off Marsh Wall, SW17 6FF, London, UK”, AI can spot the error in the postal code and correct it to “E14 9FF”.
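The enrichment idea in the second bullet can be sketched as a plain lookup against reference data. This is only an illustration of the principle under stated assumptions, not a claim about how any LLM works internally. `REFERENCE_POSTCODES` is a hypothetical third-party table holding just the one street and postcode from the example above, and the regex is a simplified UK postcode pattern.

```python
import re

# Hypothetical third-party reference data: street name -> postcode.
# The single entry reuses the values from the example in the text.
REFERENCE_POSTCODES = {
    "meridian place": "E14 9FF",
}

# Simplified pattern for UK postcodes such as "SW17 6FF" or "E14 9FF".
UK_POSTCODE = re.compile(r"\b[A-Z]{1,2}\d[A-Z\d]?\s*\d[A-Z]{2}\b")


def correct_postcode(address: str) -> str:
    """Replace the postcode in an address if reference data knows the street."""
    lowered = address.lower()
    for street, postcode in REFERENCE_POSTCODES.items():
        if street in lowered:
            # Swap only the first postcode-looking token for the reference value.
            return UK_POSTCODE.sub(postcode, address, count=1)
    # Unknown street: leave the first party data untouched.
    return address


if __name__ == "__main__":
    print(correct_postcode("75 Meridian Place, Off Marsh Wall, SW17 6FF, London, UK"))
```

Addresses on streets missing from the reference table pass through unchanged, which is the safer default when the enrichment source has gaps.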

As LLMs become more advanced over time, I expect that they will become more and more resilient to bad quality data. Ergo, I predict that GIGO will become obsolete in the foreseeable future.

In Part 2, I will cover five more revolutionary things about AI. Watch this space!