We saw three illustrations of False Positives in the first part of this False Positive Primer. They were:

- Credit Card Fraud Detection & Prevention

- Covid-19 Test

- “Cry Wolf” Syndrome

In this second part, we take up three more examples of this concept in statistics.

#4. EMAIL SPAM FILTER

Have you ever found your emails suddenly landing up in somebody’s spam folder after months or even years of being delivered correctly to their inbox?

I face this problem regularly when the recipient uses Gmail or a corporate email system that uses Google Mail as its Email Service Provider.

To understand why this happens, let’s dig deeply into spam filter technology.

Every Mail Server software has a spam filter, which decides whether to tag an email as spam or kosher. A typical spam filter hoovers up a myriad of data about incoming emails such as IP address, time of day, frequency of emails sent, country of origin, and computes a spam index for each email based on these parameters. If the spam index exceeds a certain preset value (“threshold”), the spam filter tags the email as spam and delivers it to the spam folder. Emails with spam index below the threshold are judged kosher and delivered to the inbox.
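The scoring-and-threshold logic described above can be sketched in a few lines of Python. This is only an illustration: the signal names, the weights and the threshold of 0.6 are all made up, since real spam filters keep their parameters and algorithms proprietary.

```python
# Hypothetical weights and threshold, chosen only for illustration.
# Real spam filters do not publish theirs.
WEIGHTS = {
    "ip_on_blocklist": 0.5,
    "sent_at_odd_hour": 0.1,
    "high_send_frequency": 0.3,
    "unfamiliar_country": 0.2,
}
THRESHOLD = 0.6


def spam_index(signals: dict[str, bool]) -> float:
    """Add up the weights of every signal that fired for this email."""
    return sum(weight for name, weight in WEIGHTS.items() if signals.get(name, False))


def deliver(signals: dict[str, bool], threshold: float = THRESHOLD) -> str:
    """Return the folder the email is delivered to."""
    return "spam" if spam_index(signals) > threshold else "inbox"


email = {"high_send_frequency": True, "unfamiliar_country": True}
print(deliver(email))                 # index 0.5 is below 0.6, so: inbox
print(deliver(email, threshold=0.4))  # same email, stricter threshold: spam
```

Note that the email itself is identical in both calls. Only the threshold moved, which is enough to send a previously kosher email to the spam folder.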

The list of parameters, the algorithm to compute the spam index and the threshold – these are all proprietary to the specific mail server software / spam filter. They may also change from time to time depending on the volume of emails. Ergo, what got delivered to inbox for five years can suddenly get delivered to spam folder tomorrow.

The four states of spam filter are as follows:

- Email is Genuine. Spam Filter calls it Spam and delivers it to Spam folder. False Positive

- Email is Genuine. Spam Filter does not call it Spam and delivers it to Inbox. True Negative

- Email is Spam. Spam Filter calls it Spam and delivers it to Spam folder. True Positive

- Email is Spam. Spam Filter does not call it Spam and delivers it to Inbox. False Negative
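The four states above map directly onto a small function. The sketch below labels each email and then tallies the outcomes; the sample data is invented purely to show the bookkeeping, and the false positive rate is computed the usual way, as False Positives divided by all genuine emails.

```python
from collections import Counter


def classify_outcome(is_spam: bool, flagged_as_spam: bool) -> str:
    """Map (what the email really is, what the filter said) to one of the four states."""
    if flagged_as_spam:
        return "True Positive" if is_spam else "False Positive"
    return "False Negative" if is_spam else "True Negative"


# Invented sample: (email is really spam, filter called it spam)
SAMPLE = [
    (False, False),
    (False, False),
    (False, True),   # genuine email sent to the spam folder
    (True, True),
    (True, False),   # spam that reached the inbox
    (False, False),
]

counts = Counter(classify_outcome(is_spam, flagged) for is_spam, flagged in SAMPLE)

# Share of genuine emails that were wrongly sent to the spam folder.
false_positive_rate = counts["False Positive"] / (
    counts["False Positive"] + counts["True Negative"]
)

print(dict(counts))
print(false_positive_rate)
```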

False Positive Strikes Again! I never installed any adblocker but @Forbes thinks otherwise. https://t.co/nQwdDNP9mw

— Ketharaman Swaminathan (@s_ketharaman) February 9, 2016

#5. CLICK FRAUD

Click fraud is when you click on ads on your own website.

Suppose you own a website. To monetize it, you host digital ads via the Google AdSense program. Every time a visitor to your website clicks on an ad, the advertiser pays a certain amount (cost per click, or CPC) to Google, and Google pays you a certain portion of the CPC (typically 70%) for hosting the ad.
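As a quick worked example of that split, here is a minimal sketch. The click count and the CPC of 50 cents are made-up numbers, the 70% share is the typical figure mentioned above, and amounts are kept in whole cents to avoid rounding surprises.

```python
def split_click_revenue(
    clicks: int, cpc_cents: int, publisher_share_pct: int = 70
) -> dict[str, int]:
    """Split what the advertiser pays between the website owner and Google, in cents."""
    advertiser_pays = clicks * cpc_cents
    publisher_gets = advertiser_pays * publisher_share_pct // 100
    return {
        "advertiser_pays": advertiser_pays,
        "publisher_gets": publisher_gets,
        "google_keeps": advertiser_pays - publisher_gets,
    }


# 100 clicks at a hypothetical CPC of 50 cents
print(split_click_revenue(clicks=100, cpc_cents=50))
# {'advertiser_pays': 5000, 'publisher_gets': 3500, 'google_keeps': 1500}
```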

Google AdSense has spawned a huge cottage industry around fake clicks generated by thousands of people working in so-called click farms who blindly click ads on millions of websites. While the website owner makes money for nothing, the advertiser’s ad spend is wasted.

Clicking the the Google Ads on your own website does not break any law of the land aka it's not illegal. But Google has every right to blacklist your AdSense account for Click Fraud. #PayTM #Google #PlayStore #Cashback #SportsBetting

— Ketharaman Swaminathan (@s_ketharaman) October 8, 2020

Over time, click fraud would undermine advertisers’ confidence in Google Ads. Therefore Google comes down heavily on click fraud and refunds advertisers for the clicks it judges to be fraudulent.

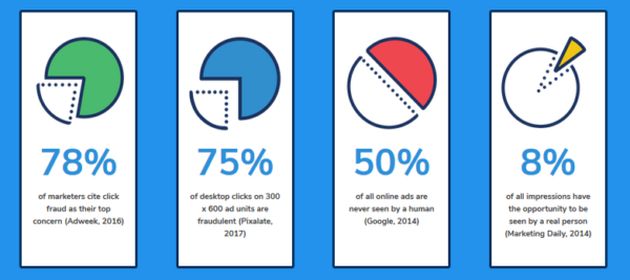

The search ad giant uses proprietary technology to ferret out fraudulent clicks based on IP address, click frequency, time of day, and other parameters. While not much is known in the public domain about this technology, there’s enough reason to believe that it’s non-deterministic / stochastic. Like the other stochastic models we have seen so far, click fraud detection is subject to the following four states:

- Click is Genuine. Google calls it Click Fraud and refunds Advertiser. False Positive. Google loses revenue. Advertiser gains undeserved rebate on ad spend.

- Click is Genuine. Google does not call it Click Fraud and does not refund Advertiser. True Negative. Both Google and Advertiser are happy.

- Click is Fraud. Google calls it Click Fraud and refunds Advertiser. True Positive. Google does not earn revenue. Advertiser gets well deserved rebate on ad spend.

- Click is Fraud. Google does not call it Click Fraud and does not refund Advertiser. False Negative. Google gets undeserved revenue. Advertiser is cheated on ad spend.

Almost all of Google owner Alphabet’s revenues come from ads. Click fraud amounts to around 15% of all clicks, which means that Google can dial its revenues up or down by 15% simply by playing around with the settings of its click fraud algorithm. This is a very enviable position to be in for any publicly traded company whose stock price is governed by Wall Street gyrations driven by EPS estimates versus actuals.

#6. BOILER EXPLOSION

Too many False Positives can often lead to a False Negative being neglected when it happens. In industrial scenarios, that can prove catastrophic.

Take this textile manufacturer for example.

The boiler in its mill had a high temperature sensor. If the boiler overheated, the sensor would set off an alarm and shut it down by cutting off its fuel supply (“trip”), thus preventing an explosion.

Over the years, the alarm went off frequently but detailed investigations uncovered no overheating (False Positive). As a result, plant operators became fatigued with the sensor’s behavior and disabled its alarm-cum-tripping feature.

Then, on one not-so-fine morning, the boiler actually overheated. Since the sensor was disabled, there was no alarm and the boiler didn’t shut down (False Negative). The boiler continued to overheat and eventually exploded, killing many people in the factory.

This tragic incident echoes the following passage in the research paper entitled A FOCUSED, CONTEXT-SENSITIVE APPROACH TO MONITORING, authored by Richard J. Doyle et al. of the Jet Propulsion Laboratory, California Institute of Technology (Caltech).

Studies of plant catastrophes have revealed that information which might have been useful in preventing disaster was typically available … in the data presented to operators.

Except that, in this case, the available information had been turned off by the plant operators.

Now, all of us keep measuring temperature accurately with a household thermometer. Thermal scanning devices have become commonplace during the current coronavirus pandemic. The natural question that arises is: why should there be any false positives in the measurement of a mundane parameter like temperature?

The answer to that question lies in the fact that temperature is an easy-to-measure variable only in an isolated context.

In a boiler, there are multiple variables and sensors to measure them. The overall model that makes sense of the readings, works out their interdependencies and takes actions therefrom is quite complex. As highlighted in the above paper:

The traditional approach to verifying the correct operation of a system being monitored involves associating alarm thresholds with sensors. Fixed threshold values for each sensor are determined ahead of time by analyzing the designed nominal behavior for the system. Whenever a sensor value crosses a threshold during operation, an alarm is raised. Fixed alarm thresholds are useful for defining the operating limits of a physical (point?) system, such as… the temperature at which… the onboard computer of a spacecraft (or boiler?) is at risk. Nonetheless, they are woefully inadequate for verifying the nominal operation of a system with many operating modes… The problem is that fixed alarm thresholds are derived from an over-summarized model of the behavior of a system. If the thresholds are chosen conservatively, then false alarms occur. If they are chosen boldly, then undetected anomalies occur. (emphasis mine)
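The trade-off in the last two sentences of that passage can be sketched with a handful of readings. Everything below is made up for illustration: the temperatures, their units and the three candidate thresholds are hypothetical, and the "overheating" labels stand in for what a detailed investigation would have found.

```python
# Hypothetical boiler readings: (temperature, boiler was really overheating).
# A reading of 215 is normal in one operating mode, while 210 is already
# dangerous in another, so no single fixed threshold separates the two cleanly.
READINGS = [
    (180, False),
    (195, False),
    (205, False),
    (210, True),
    (198, False),
    (225, True),
    (215, False),
    (240, True),
]


def count_errors(readings: list[tuple[int, bool]], threshold: int) -> tuple[int, int]:
    """Return (false alarms, missed overheating events) for a fixed alarm threshold."""
    false_alarms = sum(
        1 for temp, overheating in readings if temp > threshold and not overheating
    )
    missed = sum(
        1 for temp, overheating in readings if temp <= threshold and overheating
    )
    return false_alarms, missed


for threshold in (200, 212, 230):
    false_alarms, missed = count_errors(READINGS, threshold)
    print(f"threshold={threshold}: false alarms={false_alarms}, missed={missed}")
```

With these numbers, the conservative threshold of 200 raises two false alarms and misses nothing, the bold threshold of 230 raises no false alarms but misses two real events, and the middle value of 212 still gets one of each wrong.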

"In reality, not all sensors can or should be checked all of the time. We must let go of the notion of comprehensive monitoring" ~ https://t.co/6KVYXbPtEi via CalTech JPL.

Science realizes what Business has known all along: You can't gather all available evidence.

— Ketharaman Swaminathan (@s_ketharaman) December 9, 2020

Because of the complexity of the model, temperature exhibits False Positive and False Negative tendencies in a boiler, even though it behaves in a straightforward manner in commonplace situations.

Between the first and second parts of this primer, we have discussed six cases of False Positives. I’m sure many of you will have more examples. Please feel free to share them in the comments below.